
Ho Ho Ho! Follow Santa's journey around the world on your phone

This year, no one needs to go to bed early to make sure Santa comes over. Since NORAD is tracking Santa's journey around the world, you can find his current location on the 24th. If you see he's getting close by, just hop into bed. And if he's already passed by your house but you don't yet see presents (or coal!) under the tree, rest assured he'll be looping back once you're asleep. Read more about how NORAD tracks Santa on our Official Google Blog.

To make following Santa's journey even easier, you can find him on your phone too. Make sure you have Google Maps for mobile (available for most phones). Then just search! Just as you'd put in a query for "pizza" to find pizza places, or "San Antonio" to find it on a map, you can search for "Santa" to find where he is at the time. This way, you can stay up to date whether you're lounging by the fire at a ski lodge, stuck in traffic en route to Grandma's (get your kids to look it up for you!), or at the dinner table. To get started, go to m.noradsanta.org on your mobile phone, or just search for "Santa" in Google Maps for mobile on December 24th.

Matt Aldridge, Mobile Elf

Hello, Stack Overflow!

Over the past year, an Android presence has been growing on a relatively new technical Q&A web site called Stack Overflow. The site was designed specifically for programmers, with features like syntax highlighting, tagging, user reputation, and community editing. It's attracted a loyal software developer community, and developers continue to express great praise for this new tool. Well, the Android team has been listening...and we agree.

Today, I'm happy to announce that we're working with Stack Overflow to improve developer support, especially for developers new to Android. In essence, the Android tag on Stack Overflow will become an official Android app development Q&A medium. We encourage you to post your beginner-level technical questions there. It's also important to point out that we don't plan to change the info-android-phone group, so intermediate and expert users should still feel free to post there.

I think that this will be a great new resource for novice Android developers, and our team is really excited to participate in the growth of the Android developer community on Stack Overflow. I hope to see you all there!

Back and other hard keys: three stories

Android 2.0 introduces new behavior and support for handling hard keys such as BACK and MENU, including some special features to support the virtual hard keys that are appearing on recent devices such as Droid.

This article gives you three stories about these changes, from the simplest to the gory details. Pick the one you prefer.

Story 1: Making things easier for developers

If you were to survey the base applications in the Android platform, you would notice a fairly common pattern: add a little bit of magic to intercept the BACK key and do something different. To do this right, the magic needs to look something like this:

```java
@Override
public boolean onKeyDown(int keyCode, KeyEvent event) {
    if (keyCode == KeyEvent.KEYCODE_BACK && event.getRepeatCount() == 0) {
        // do something on back.
        return true;
    }

    return super.onKeyDown(keyCode, event);
}
```

How to intercept the BACK key in an Activity is also one of the common questions we see developers ask, so as of 2.0 we have a new little API that makes this simpler, easier to discover, and easier to get right:

```java
@Override
public void onBackPressed() {
    // do something on back.
}
```

If this is all you care about doing, and you're not worried about supporting versions of the platform before 2.0, then you can stop here. Otherwise, read on.
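Why does the pre-2.0 snippet check getRepeatCount() == 0? Holding a key down produces a stream of auto-repeated down events, each with a higher repeat count, and without the check the BACK action would fire once per repeat. The following is a plain-Java model rather than Android code: SimpleKeyEvent, BackInterceptModel, and the key-code constants are hypothetical stand-ins so the logic can run outside a device.

```java
import java.util.Arrays;
import java.util.List;

// Hypothetical stand-in for android.view.KeyEvent (only the fields we need).
final class SimpleKeyEvent {
    final int keyCode;
    final int repeatCount; // 0 for the first down, then 1, 2, ... for auto-repeats

    SimpleKeyEvent(int keyCode, int repeatCount) {
        this.keyCode = keyCode;
        this.repeatCount = repeatCount;
    }
}

// Plain-Java model of the pre-2.0 onKeyDown() BACK interception pattern.
final class BackInterceptModel {
    static final int KEY_BACK = 1; // hypothetical key codes, not Android's values
    static final int KEY_MENU = 2;

    int backActions = 0;

    // Same guard as the snippet: act only on the first BACK down.
    boolean onKeyDown(SimpleKeyEvent event) {
        if (event.keyCode == KEY_BACK && event.repeatCount == 0) {
            backActions++;
            return true;  // consumed
        }
        return false;     // would fall through to the default handling
    }

    static int countBackActions(List<SimpleKeyEvent> downs) {
        BackInterceptModel model = new BackInterceptModel();
        for (SimpleKeyEvent e : downs) {
            model.onKeyDown(e);
        }
        return model.backActions;
    }

    public static void main(String[] args) {
        // Holding BACK: one initial down followed by two auto-repeats.
        List<SimpleKeyEvent> held = Arrays.asList(
                new SimpleKeyEvent(KEY_BACK, 0),
                new SimpleKeyEvent(KEY_BACK, 1),
                new SimpleKeyEvent(KEY_BACK, 2));
        System.out.println(countBackActions(held)); // prints 1
    }
}
```

On 2.0 and later, onBackPressed() spares you this bookkeeping, which is the point of the new API.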

Story 2: Embracing long press

One of the fairly late additions to the Android platform was the use of long press on hard keys to perform alternative actions. In 1.0 this was long press on HOME for the recent apps switcher and long press on CALL for the voice dialer. In 1.1 we introduced long press on SEARCH for voice search, and 1.5 introduced long press on MENU to force the soft keyboard to be displayed as a backwards compatibility feature for applications that were not yet IME-aware.

(As an aside: long press on MENU was only intended for backwards compatibility, and thus has some perhaps surprising behavior in how strongly the soft keyboard stays up when it is used. This is not intended to be a standard way to access the soft keyboards, and all apps written today should have a more standard and visible way to bring up the IME if they need it.)

Unfortunately the evolution of this feature resulted in a less than optimal implementation: all of the long press detection was implemented in the client-side framework's default key handling code, using timed messages. This resulted in a lot of duplication of code and some behavior problems; since the actual event dispatching code had no concept of long presses and all timing for them was done on the main thread of the application, the application could be slow enough to not update within the long press timeout.

In Android 2.0 this all changes, with a real KeyEvent API and callback functions for long presses. These greatly simplify long press handling for applications, and allow them to interact correctly with the framework. For example: you can override Activity.onKeyLongPress() to supply your own action for a long press on one of the hard keys, overriding the default action provided by the framework.

Perhaps most significant for developers is a corresponding change in the semantics of the BACK key. Previously the default key handling executed the action for this key when it was pressed, unlike the other hard keys. In 2.0 the BACK key is now executed on key up. However, for existing apps, the framework will continue to execute the action on key down for compatibility reasons. To enable the new behavior in your app you must set android:targetSdkVersion in your manifest to 5 or greater.

Here is an example of code an Activity subclass can use to implement special actions for a long press and short press of the CALL key:

```java
@Override
public boolean onKeyLongPress(int keyCode, KeyEvent event) {
    if (keyCode == KeyEvent.KEYCODE_CALL) {
        // A long press of the CALL key: do our work, returning true to
        // consume it. By returning true, the framework knows an action has
        // been performed on the long press, so it will set the canceled
        // flag for the following up event.
        return true;
    }
    return super.onKeyLongPress(keyCode, event);
}

@Override
public boolean onKeyUp(int keyCode, KeyEvent event) {
    if (keyCode == KeyEvent.KEYCODE_CALL && event.isTracking()
            && !event.isCanceled()) {
        // If the CALL key is being released, AND we are tracking it from
        // an initial key down, AND it is not canceled, then handle it.
        return true;
    }
    return super.onKeyUp(keyCode, event);
}
```

Note that the above code assumes we are implementing different behavior for a key that is normally processed by the framework. If you want to implement long presses for another key, you will also need to override onKeyDown to have the framework track it:

```java
@Override
public boolean onKeyDown(int keyCode, KeyEvent event) {
    if (keyCode == KeyEvent.KEYCODE_0) {
        // This tells the framework to start tracking for a long press and
        // eventual key up. It will only do so if this is the first down
        // (not a repeat).
        event.startTracking();
        return true;
    }
    return super.onKeyDown(keyCode, event);
}
```
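To make the interplay of startTracking(), onKeyLongPress(), and the canceled flag concrete, here is a plain-Java model of the rules described above. It is not the framework's implementation: TrackedKey and its methods are hypothetical, and the 500 ms LONG_PRESS_TIMEOUT_MS is an assumed value chosen for illustration. The model detects a long press on an auto-repeat event that arrives after the timeout, and consuming the long press cancels the following up.

```java
// Plain-Java model of key tracking, long press, and cancellation.
final class TrackedKey {
    static final long LONG_PRESS_TIMEOUT_MS = 500; // assumed value for the model

    private boolean tracking;
    private boolean canceled;
    private boolean longPressDelivered;
    private long downTime;

    final StringBuilder log = new StringBuilder();

    void onDown(long timeMs, int repeatCount) {
        if (repeatCount == 0) {
            // First down: start tracking, like event.startTracking().
            tracking = true;
            canceled = false;
            longPressDelivered = false;
            downTime = timeMs;
        } else if (tracking && !longPressDelivered
                && timeMs - downTime >= LONG_PRESS_TIMEOUT_MS) {
            longPressDelivered = true;
            if (onLongPress()) {
                canceled = true; // a consumed long press cancels the up event
            }
        }
    }

    void onUp() {
        if (tracking && !canceled) {
            log.append("short;"); // the short-press action
        }
        tracking = false;
    }

    // Plays the role of onKeyLongPress() returning true.
    boolean onLongPress() {
        log.append("long;");
        return true;
    }

    public static void main(String[] args) {
        TrackedKey quick = new TrackedKey();
        quick.onDown(0, 0);
        quick.onUp();
        System.out.println(quick.log); // prints short;

        TrackedKey held = new TrackedKey();
        held.onDown(0, 0);
        held.onDown(300, 1); // auto-repeat before the timeout
        held.onDown(550, 2); // auto-repeat after the timeout: long press
        held.onUp();
        System.out.println(held.log); // prints long;
    }
}
```

A press therefore produces exactly one action, either the long-press action or the short-press action, never both.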

Story 3: Making a mess with virtual keys

Now we come to the story of our original motivation for all of these changes: support for virtual hard keys, as seen on the Droid and other upcoming devices. Instead of physical buttons, these devices have a touch sensor that extends outside of the visible screen, creating an area for the "hard" keys to live as touch sensitive areas. The low-level input system looks for touches on the screen in this area, and turns these into "virtual" hard key events as appropriate.

To applications these basically look like real hard keys, though the generated events will have a new FLAG_VIRTUAL_HARD_KEY bit set to identify them. Regardless of that flag, in nearly all cases an application can handle these "hard" key events in the same way it has always done for real hard keys.

However, these keys introduce some wrinkles in user interaction. Most important is that the keys exist on the same surface as the rest of the user interface, and they can be easily pressed with the same kind of touches. This can become an issue, for example, when the virtual keys are along the bottom of the screen: a common gesture is to swipe up the screen for scrolling, and it can be very easy to accidentally touch a virtual key at the bottom when doing this.

The solution for this in 2.0 is to introduce a concept of a "canceled" key event. We've already seen this in the previous story, where handling a long press would cancel the following up event. In a similar way, moving from a virtual key press on to the screen will cause the virtual key to be canceled when it goes up.

In fact the previous code already takes care of this — by checking isCanceled() on the key up, canceled virtual keys and long presses will be ignored. There are also individual flags for these two cases, but they should rarely be used by applications and always with the understanding that in the future there may be more reasons for a key event to be canceled.
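The cancellation rule for virtual keys can be sketched in a few lines of plain Java. This is a model only, assuming a strip of virtual keys below the visible screen: VirtualKeyStrip, its methods, and the coordinate convention (y grows downward, and y at or beyond screenHeight is the key area) are hypothetical, and the real logic lives in the platform's low-level input system.

```java
// Plain-Java model of a virtual key that is canceled when the finger
// slides from the key area onto the screen.
final class VirtualKeyStrip {
    private final int screenHeight; // touches with y >= screenHeight hit the keys
    private boolean keyDown;
    private boolean canceled;

    VirtualKeyStrip(int screenHeight) {
        this.screenHeight = screenHeight;
    }

    void touchDown(int y) {
        keyDown = y >= screenHeight; // the finger landed on a virtual key
        canceled = false;
    }

    void touchMove(int y) {
        // Sliding off the key and onto the screen cancels the key press.
        if (keyDown && y < screenHeight) {
            canceled = true;
        }
    }

    /** Returns true if an application would see an un-canceled key up. */
    boolean touchUp() {
        boolean deliver = keyDown && !canceled;
        keyDown = false;
        return deliver;
    }

    public static void main(String[] args) {
        VirtualKeyStrip strip = new VirtualKeyStrip(480);
        strip.touchDown(500);
        System.out.println(strip.touchUp()); // prints true (a tap on the key)
        strip.touchDown(500);
        strip.touchMove(400);
        System.out.println(strip.touchUp()); // prints false (swipe up the screen)
    }
}
```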

For existing applications, where BACK key compatibility is turned on to execute the action on key down, there is still the problem of accidentally detecting a back press when intending to perform a swipe. There is no solution for this except to update the application to specify that it targets SDK version 5 or later, but fortunately the BACK key is generally positioned on a far side of the virtual key area, so the user is much less likely to accidentally hit it than some of the other keys.
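The reason targetSdkVersion matters here can be reduced to one rule, sketched below as plain Java rather than framework code (BackCompatModel and backActionRuns() are hypothetical names). An app targeting a version below 5 runs the BACK action on key down, before anyone can know the press will end as a canceled swipe; an app targeting 5 or later runs it on key up, and only if the press was not canceled.

```java
// Plain-Java model of when the BACK action runs on Android 2.0.
final class BackCompatModel {
    static final int ECLAIR = 5; // API level of Android 2.0

    /**
     * @param targetSdkVersion the app's android:targetSdkVersion
     * @param canceledOnUp     whether the press ends canceled (e.g. a swipe)
     * @return true if the BACK action runs
     */
    static boolean backActionRuns(int targetSdkVersion, boolean canceledOnUp) {
        if (targetSdkVersion < ECLAIR) {
            return true;      // compatibility mode: already acted on at key down
        }
        return !canceledOnUp; // new behavior: acted on at key up, if not canceled
    }

    public static void main(String[] args) {
        System.out.println(backActionRuns(4, true));  // prints true
        System.out.println(backActionRuns(5, true));  // prints false
        System.out.println(backActionRuns(5, false)); // prints true
    }
}
```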

Writing an application that works well on pre-2.0 as well as 2.0 and later versions of the platform is also fairly easy for most common cases. For example, here is code that allows you to handle the back key in an activity correctly on all versions of the platform:

```java
@Override
public boolean onKeyDown(int keyCode, KeyEvent event) {
    // Note: Build.VERSION.SDK_INT was added in API level 4 (Android 1.6);
    // on Android 1.5, read the String Build.VERSION.SDK instead.
    if (android.os.Build.VERSION.SDK_INT < android.os.Build.VERSION_CODES.ECLAIR
            && keyCode == KeyEvent.KEYCODE_BACK
            && event.getRepeatCount() == 0) {
        // Take care of calling this method on earlier versions of
        // the platform where it doesn't exist.
        onBackPressed();
        // Consume the event so the pre-2.0 default handling does not
        // also finish the activity.
        return true;
    }

    return super.onKeyDown(keyCode, event);
}

@Override
public void onBackPressed() {
    // This will be called either automatically for you on 2.0
    // or later, or by the code above on earlier versions of the
    // platform.
}
```
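One way to convince yourself that the compatibility pattern calls onBackPressed() exactly once per press on every platform version is to model it in plain Java. CompatBackModel and simulateBackPress() are hypothetical, and sdkInt plays the role of Build.VERSION.SDK_INT. The model's onKeyDown() consumes the event after calling onBackPressed(), so nothing else acts on the press on older versions.

```java
// Plain-Java model of the cross-version onBackPressed() pattern.
final class CompatBackModel {
    static final int ECLAIR = 5; // API level of Android 2.0

    private final int sdkInt;    // stands in for Build.VERSION.SDK_INT
    int backPressedCalls = 0;

    CompatBackModel(int sdkInt) {
        this.sdkInt = sdkInt;
    }

    // Mirrors the app's onKeyDown() override.
    boolean onKeyDown(boolean isBackKey, int repeatCount) {
        if (sdkInt < ECLAIR && isBackKey && repeatCount == 0) {
            onBackPressed();
            return true;
        }
        return false;
    }

    // Mirrors the app's onBackPressed() override.
    void onBackPressed() {
        backPressedCalls++;
    }

    // Stands in for the platform: every version delivers the key down to
    // onKeyDown(); only 2.0 and later call onBackPressed() themselves.
    void simulateBackPress() {
        onKeyDown(true, 0);
        if (sdkInt >= ECLAIR) {
            onBackPressed();
        }
    }

    public static void main(String[] args) {
        for (int sdk : new int[] {3, 4, 5, 7}) {
            CompatBackModel m = new CompatBackModel(sdk);
            m.simulateBackPress();
            System.out.println("SDK " + sdk + ": " + m.backPressedCalls + " call(s)");
        }
    }
}
```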

For the hard core: correctly dispatching events

One final topic that is worth covering is how to correctly handle events in the raw dispatch functions such as dispatchKeyEvent() or onKeyPreIme(). These require a little more care, since you can't rely on some of the help the framework provides when it calls the higher-level functions such as onKeyDown(). The code below shows how you can intercept the dispatching of the BACK key so that you correctly execute your action when it is released.

```java
// Written for a View subclass, where getKeyDispatcherState() is defined.
@Override
public boolean dispatchKeyEvent(KeyEvent event) {
    if (event.getKeyCode() == KeyEvent.KEYCODE_BACK) {
        // May be null if the view is not attached to a window.
        KeyEvent.DispatcherState state = getKeyDispatcherState();
        if (state != null) {
            if (event.getAction() == KeyEvent.ACTION_DOWN
                    && event.getRepeatCount() == 0) {
                // Tell the framework to start tracking this event.
                state.startTracking(event, this);
                return true;
            } else if (event.getAction() == KeyEvent.ACTION_UP) {
                state.handleUpEvent(event);
                if (event.isTracking() && !event.isCanceled()) {
                    // DO BACK ACTION HERE
                    return true;
                }
            }
        }
    }
    return super.dispatchKeyEvent(event);
}
```

The call to getKeyDispatcherState() returns an object that is used to track the current key state in your window. It is generally available on the View class, and an Activity can use any of its views to retrieve the object if needed.
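The value of the dispatcher state is that it remembers who saw the initial down, so an up event that arrives without a matching tracked down (for example, if the down was delivered elsewhere before focus moved) is ignored. The plain-Java model below illustrates that bookkeeping only; DispatchStateModel, BackKeyReceiver, and KEY_BACK are hypothetical, and cancellation is left out for brevity.

```java
import java.util.HashMap;
import java.util.Map;

// Models the bookkeeping behind startTracking()/handleUpEvent().
final class DispatchStateModel {
    private final Map<Integer, Object> owners = new HashMap<>();

    void startTracking(int keyCode, Object target) {
        owners.put(keyCode, target);
    }

    /** Consumes the tracking record; true only if target started it. */
    boolean handleUpEvent(int keyCode, Object target) {
        return owners.remove(keyCode) == target;
    }
}

// Mirrors the dispatchKeyEvent() logic above for the BACK key.
final class BackKeyReceiver {
    static final int KEY_BACK = 1; // hypothetical key code

    private final DispatchStateModel state;
    int backActions = 0;

    BackKeyReceiver(DispatchStateModel state) {
        this.state = state;
    }

    void dispatch(boolean down, int repeatCount) {
        if (down && repeatCount == 0) {
            state.startTracking(KEY_BACK, this);
        } else if (!down && state.handleUpEvent(KEY_BACK, this)) {
            backActions++; // "DO BACK ACTION HERE"
        }
    }

    public static void main(String[] args) {
        BackKeyReceiver receiver = new BackKeyReceiver(new DispatchStateModel());
        receiver.dispatch(false, 0); // stray up: no tracked down, ignored
        receiver.dispatch(true, 0);  // first down starts tracking
        receiver.dispatch(true, 1);  // auto-repeat is ignored
        receiver.dispatch(false, 0); // matching up runs the action
        System.out.println(receiver.backActions); // prints 1
    }
}
```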

New resources and sample code on developer.android.com

Hey Android developers—if you've visited the online Android SDK documentation recently, you may have noticed a few changes. That's right, there's a new Resources tab, which was designed to take some of the load off the Developer's Guide. We've moved a number of existing resources to the Resources tab, including tutorials, sample code, and FAQs. We've also formalized a few of our most popular developer blog posts into technical articles; watch for more of these to appear in the future.

In addition, we just released a new batch of sample code, available now as a ZIP file download on the samples index page. And we're working on updating the way in which we distribute official sample code; more on that some other time.

The new sample code includes:

- Multiple Resolutions: a simple example showing how to use resource directory qualifiers to support multiple screen configurations and Android SDK versions.

- Wiktionary and WiktionarySimple: sample applications that illustrate how to create an interactive home screen widget.

- Contact Manager: an example on using the new ContactsContract interface to query and manipulate a user's various accounts and contact providers.

- Bluetooth Chat: a fun little demo that allows two users to have a one-on-one chat over Bluetooth. It demonstrates how to discover devices, initiate a connection, and transfer data.

- API Demos > App > Activity > QuickContactsDemo: a demo showing how to use the android.widget.QuickContactBadge class, new in Android 2.0.

- API Demos > App > Activity > SetWallpaper: a demo showing how to use the new android.app.WallpaperManager class to allow users to change the system wallpaper.

- API Demos > App > Text-To-Speech: a sample using Text-To-Speech (speech synthesis) to make your application talk.

- NotePad (now with Live Folders): this sample now includes code for creating Live Folders.

We hope these new samples can be a valuable resource for learning some of the newer features in Android 1.6 and 2.0. Let us know in the info-android-phone Google Group if you have any questions about these new samples or about the new Resources tab.

Thanks for tuning in, and 'til next time, happy coding!

The Iterative Web App: Feature-Rich and Fast

A growing number of mobile devices ship with an all-important feature: a modern web browser. And this is significant for two reasons:

- As an engineering team, we can build a single app with HTML and JavaScript, and have it "just work" across many mobile operating systems. The cost savings are substantial, not to mention the time you can re-invest in user-requested features.

- Having a web application also means we can launch products and features as soon as they're ready. And for users, the latest version of the app is always just a URL and a refresh away.

Over the past 8 months we've pushed the limits of HTML5 to launch a steady string of Gmail features, including:

- Full label support

- Swipe to Archive

- Smart Links

- Faster address auto-complete

- Move and Enhanced Refresh

- Outbox

- Auto-expanding compose boxes

- And many more...

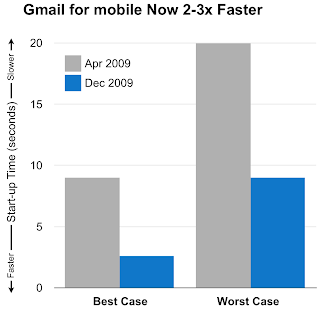

As of today, and thanks to numerous optimizations, I'm happy to report that Gmail for mobile loads 2-3x faster than it did in April (see Figure 1). In fact on newer iPhone and Android devices, the app now loads in under 3 seconds. So yes, the mobile web can deliver really responsive applications.

Figure 1: Best and Worst Case Gmail for mobile start-up times, April 2009 vs. December 2009. All figures recorded on an iPhone 3G with EDGE data access.

The Gmail for mobile team isn't done, of course. We've focused primarily on performance over the past few months, but many other features and optimizations are on the way. So keep visiting gmail.com for the latest and greatest version of the app.

Looking ahead, it's also worth noting that as a worldwide mobile team, we'll continue to build native apps where it makes sense. But we're incredibly optimistic about the future of the mobile web -- both for developers and for the users we serve.

Posted by Alex Nicolaou, Engineering Manager

Knowing is half the battle

As a developer, I often wonder which Android platforms my applications should support, especially as the number of Android-powered devices grows. Should my application only focus on the latest version of the platform or should it support older ones as well?

To help with this kind of decision, I am excited to announce the new device dashboard. It provides information about deployed Android-powered devices that is helpful to developers as they build and update their apps. The dashboard provides the relative distribution of Android platform versions on devices running Android Market.


The above graph shows the relative number of Android devices that have accessed Android Market during the first 14 days of December 2009.

From a developer's perspective, there are a number of interesting points on this graph:

- At this point, there's little incentive to make sure a new application is backward compatible with Android 1.0 and Android 1.1.

- Close to 30% of the devices are running Android 1.5. To take advantage of this significant install base, you may want to consider supporting Android 1.5.

- Starting with Android 1.6, devices can have different screen densities & sizes. There are several devices out there that fall in this category, so make sure to adapt your application to support different screen sizes and take advantage of devices with small, low density (e.g. QVGA) and normal, high density (e.g. WVGA) screens. Note that Android Market will not list your application on small screen devices unless its manifest explicitly indicates support for "small" screen sizes. Make sure you properly configure the emulator and test your application on different screen sizes before uploading to Market.

- A new SDK for Android 2.0.1 was released two weeks ago. All Android 2.0 devices will be updated to 2.0.1 before the end of the year, so if your application uses features specific to Android 2.0, you are encouraged to update it to take advantage of the latest Android 2.0.1 API instead.

In summary, Android 1.5, 1.6, and 2.0.1 are the 3 versions of the platform that are deployed in volume. Our goal is to provide you with the tools and information to make it easy for you to target specific versions of the platform or all the versions that are deployed in volume.

We plan to update the dashboard regularly to reflect deployment of new Android platforms. We also plan to expand the dashboard to include other information like devices per screen size and so on.
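Once you have the distribution from the dashboard, a quick calculation tells you roughly what share of active devices a given minSdkVersion would reach (Android 1.5, 1.6, and 2.0.1 correspond to API levels 3, 4, and 6). The helper below is a hypothetical sketch, not part of any SDK, and the percentages in main() are placeholders for illustration only, not dashboard figures; substitute the current numbers.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper: sum the share of devices at or above minSdkVersion.
final class VersionCoverage {
    static double reachablePercent(Map<Integer, Double> percentByApiLevel,
                                   int minSdkVersion) {
        double total = 0.0;
        for (Map.Entry<Integer, Double> entry : percentByApiLevel.entrySet()) {
            if (entry.getKey() >= minSdkVersion) {
                total += entry.getValue();
            }
        }
        return total;
    }

    public static void main(String[] args) {
        // Placeholder numbers, NOT real dashboard data.
        Map<Integer, Double> dist = new LinkedHashMap<>();
        dist.put(3, 30.0); // Android 1.5
        dist.put(4, 50.0); // Android 1.6
        dist.put(6, 20.0); // Android 2.0.1
        for (int minSdk : new int[] {3, 4, 6}) {
            System.out.printf("minSdkVersion %d reaches %.1f%%%n",
                    minSdk, reachablePercent(dist, minSdk));
        }
    }
}
```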

An Android dogfood diet for the holidays

'Tis the Season to be Merry and Mobile

Come to Our Virtual Office Hours

Starting this week, we're going to be holding regular IRC office hours for Android app developers in the #android-dev channel on irc.freenode.net. Members of the Android team will be on hand to answer your technical questions. (Note that we will not be able to provide customer support for the phones themselves.)

We've arranged our office hours to accommodate as many different schedules as possible, for folks around the world. We will initially hold two sessions each week:

- 12/15/09, Tuesday, 9 a.m. to 10 a.m. PST

- 12/17/09, Thursday, 5 p.m. to 6 p.m. PST

- 12/22/09, Tuesday, 9 a.m. to 10 a.m. PST

- 01/06/10, Wednesday, 9 a.m. to 10 a.m. PST

- 01/07/10, Thursday, 5 p.m. to 6 p.m. PST

Check Wikipedia for a helpful list of IRC clients. Alternatively, you could use a web interface such as the one at freenode.net. We will try to answer as many questions as we can get through in the hour.

We hope to see you there!

Optimize your layouts

Writing user interface layouts for Android applications is easy, but it can sometimes be difficult to optimize them. Most often, heavy modifications made to existing XML layouts, like shuffling views around or changing the type of a container, lead to inefficiencies that go unnoticed.

For example, running the layoutopt tool on a directory of sample layouts produces output like this:

```
$ layoutopt samples/
samples/compound.xml
7:23 The root-level <FrameLayout/> can be replaced with <merge/>
11:21 This LinearLayout layout or its FrameLayout parent is useless
samples/simple.xml
7:7 The root-level <FrameLayout/> can be replaced with <merge/>
samples/too_deep.xml
-1:-1 This layout has too many nested layouts: 13 levels, it should have <= 10!
20:81 This LinearLayout layout or its LinearLayout parent is useless
24:79 This LinearLayout layout or its LinearLayout parent is useless
28:77 This LinearLayout layout or its LinearLayout parent is useless
32:75 This LinearLayout layout or its LinearLayout parent is useless
36:73 This LinearLayout layout or its LinearLayout parent is useless
40:71 This LinearLayout layout or its LinearLayout parent is useless
44:69 This LinearLayout layout or its LinearLayout parent is useless
48:67 This LinearLayout layout or its LinearLayout parent is useless
52:65 This LinearLayout layout or its LinearLayout parent is useless
56:63 This LinearLayout layout or its LinearLayout parent is useless
samples/too_many.xml
7:413 The root-level <FrameLayout/> can be replaced with <merge/>
-1:-1 This layout has too many views: 81 views, it should have <= 80!
samples/useless.xml
7:19 The root-level <FrameLayout/> can be replaced with <merge/>
11:17 This LinearLayout layout or its FrameLayout parent is useless
```
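Two of the warnings above are simple tree metrics: nesting depth (the output asks for at most 10 levels) and total view count (at most 80 views). The sketch below computes both over a hypothetical in-memory layout tree. It only mirrors the limits reported in the output; LayoutNode and LayoutChecks are illustrative names, and this is not how layoutopt itself is implemented.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical layout tree node: a view type plus its children.
final class LayoutNode {
    final String type;
    final List<LayoutNode> children;

    LayoutNode(String type, LayoutNode... children) {
        this.type = type;
        this.children = new ArrayList<>(Arrays.asList(children));
    }

    // Number of nodes on the longest root-to-leaf path.
    int depth() {
        int max = 0;
        for (LayoutNode child : children) {
            max = Math.max(max, child.depth());
        }
        return 1 + max;
    }

    // Total number of views in this subtree, including this one.
    int viewCount() {
        int n = 1;
        for (LayoutNode child : children) {
            n += child.viewCount();
        }
        return n;
    }
}

final class LayoutChecks {
    static final int MAX_DEPTH = 10; // limits taken from the output above
    static final int MAX_VIEWS = 80;

    static List<String> check(LayoutNode root) {
        List<String> warnings = new ArrayList<>();
        int depth = root.depth();
        if (depth > MAX_DEPTH) {
            warnings.add("too many nested layouts: " + depth + " levels");
        }
        int views = root.viewCount();
        if (views > MAX_VIEWS) {
            warnings.add("too many views: " + views);
        }
        return warnings;
    }

    public static void main(String[] args) {
        // A TextView wrapped in 12 LinearLayouts: 13 levels deep.
        LayoutNode deep = new LayoutNode("TextView");
        for (int i = 0; i < 12; i++) {
            deep = new LayoutNode("LinearLayout", deep);
        }
        System.out.println(check(deep)); // prints [too many nested layouts: 13 levels]
    }
}
```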

layoutopt.bat in the tools directory of the SDK and on the last line, replace %jarpath% with -jar %jarpath%.

New version of Google Mobile App for iPhone in the App Store

In this version, we have a redesigned search results display that shows more results at once and, more importantly, opens web pages from the results within the app. This will get you to what you need faster, which is always our goal at Google.

For those less utilitarian and more flamboyant, we've exposed our visual tweaks settings called "Bells and Whistles" - some of our users had discovered this already in previous versions. You can style your Google Mobile App in any shade: red, taupe, or even heliotrope. If you're on a faster iPhone, like the iPhone 3GS, you may want to try the live waveform setting which turns on, as the name suggests, a moving waveform when you search by voice.

On the subject of searching by voice, you can now choose your spoken language or accent. For example, if you're Australian but live in London, you can improve the recognition accuracy by selecting Australian in the Voice Search settings. And now both Mandarin and Japanese are supported languages as well.

If you don't have Google Mobile App yet, download it from the App Store or read more about it. If you have any suggestions or comments, feel free to join in on our support forums or suggest ideas in our Mobile Products Ideas page. You can also follow us on Twitter @googlemobileapp.

Posted by Alastair Tse, Software Engineer

Mobile Search for a New Era: Voice, Location and Sight

A New Era of Computing

Mobile devices straddle the intersection of three significant industry trends: computing (or Moore's Law), connectivity, and the cloud. Simply put:

- Phones get more powerful and less expensive all the time

- They're connected to the Internet more often, from more places; and

- They tap into computational power that's available in datacenters around the world

Just think: with a sensor-rich phone that's connected to the cloud, users can now search by voice (using the microphone), by location (using GPS and the compass), and by sight (using the camera). And we're excited to share Google's early contributions to this new era of computing.

Search by Voice

We first launched search by voice about a year ago, enabling millions of users to speak to Google. And we're constantly reminded that the combination of a powerful device, an Internet connection, and datacenters in the cloud is what makes it work. After all:

- We first stream sound files to Google's datacenters in real-time

- We then convert utterances into phonemes, into words, into phrases; and

- We then compare phrases against Google's billions of daily queries to assign probability scores to all possible transcriptions; and

- We do all of this in the time it takes to speak a few words

Over the past 12 months we've introduced the product on many more devices, in more languages, with vastly improved accuracy rates. And today we're announcing that search by voice understands Japanese, joining English and Mandarin.

Looking ahead, we dream of combining voice recognition with our language translation infrastructure to provide in-conversation translation [video] -- a UN interpreter for everyone! And we're just getting started.

Search by Location

Your phone's location is usually your location: it's in your pocket, in your purse, or on your nightstand, and as a result it's more personal than any PC before it. This intimacy is what makes location-based services possible, and for its part, Google continues to invest in things like My Location, real-time traffic, and turn-by-turn navigation. Today we're tackling a question that's simple to ask, but surprisingly difficult to answer: "What's around here, anyway?"

Suppose you're early to pick up your child from school, or your drive to dinner was quicker than expected, or you've just checked into a new hotel. Chances are you've got time to kill, but you don't want to spend it entering addresses, sifting through POI categories, or even typing a search. Instead you just want stuff nearby, whatever that might be. Your location is your query, and we hear you loud and clear.

Today we're announcing "What's Nearby" for Google Maps on Android 1.6+ devices, available as an update from Android Market. To use the feature just long press anywhere on the map, and we'll return a list of the 10 closest places, including restaurants, shops and other points of interest. It's a simple answer to a simple question, finally. (And if you visit google.com from your iPhone or Android device in a few weeks, clicking "Near me now" will deliver the same experience [video].)

Of course our future plans include more than just nearby places. In the new year we'll begin showing local product inventory in search results [video]; and Google Suggest will even include location-specific search terms [video]. All thanks to powerful, Internet-enabled mobile devices.

Search by Sight

When you connect your phone's camera to datacenters in the cloud, it becomes an eye to see and search with. It sees the world like you do, but it simultaneously taps the world's info in ways that you can't. And this makes it a perfect answering machine for your visual questions.

Perhaps you're vacationing in a foreign country, and you want to learn more about the monument in your field of view. Maybe you're visiting a modern art museum, and you want to know who painted the work in front of you. Or maybe you want wine tasting notes for the Cabernet sitting on the dinner table. In every example, the query you care about isn't a text string, or a location -- it's whatever you're looking at. And today we're announcing a Labs product for Android 1.6+ devices that lets users search by sight: Google Goggles.

In a nutshell, Goggles lets users search for objects using images rather than words. Simply take a picture with your phone's camera, and if we recognize the item, Goggles returns relevant search results. Right now Goggles identifies landmarks, works of art, and products (among other things), and in all cases its ability to "see further" is rooted in powerful computing, pervasive connectivity, and the cloud:

- We first send the user's image to Google's datacenters

- We then create signatures of objects in the image using computer vision algorithms

- We then compare signatures against all other known items in our image recognition databases; and

- We then figure out how many matches exist; and

- We then return one or more search results, based on available metadata and ranking signals; and

- We do all of this in just a few seconds

Computer vision, like all of Google's extra-sensory efforts, is still in its infancy. Today Goggles recognizes certain images in certain categories, but our goal is to return high quality results for any image. Today you frame and snap a photo to get results, but one day visual search will be as natural as pointing a finger -- like a mouse for the real world. Either way we've got plenty of work to do, so please download Goggles from Android Market and help us get started.

The Beginning of the Beginning

Posted by Vic Gundotra, Vice President of Engineering

Android SDK Updates

Today we are releasing updates to multiple components of the Android SDK:

- Android 2.0.1, revision 1

- Android 1.6, revision 2

- SDK Tools, revision 4

Android 2.0.1 is a minor update to Android 2.0. This update includes several bug fixes and behavior changes, such as application resource selection based on API level and changes to the value of some Bluetooth-related constants. For more detailed information, please see the Android 2.0.1 release notes.

To differentiate its behavior from Android 2.0, the API level of Android 2.0.1 is 6. All Android 2.0 devices will be updated to 2.0.1 before the end of the year, so developers will no longer need to support Android 2.0 at that time. Of course, developers of applications affected by the behavior changes should start compiling and testing their apps immediately.

We are also providing an update to the Android 1.6 SDK component. Revision 2 includes fixes to the compatibility mode for applications that don't support multiple screen sizes, as well as SDK fixes. Please see the Android 1.6, revision 2 release notes for the full list of changes.

Finally, we are also releasing an update to the SDK Tools, now in revision 4. This is a minor update with mostly bug fixes in the SDK Manager. A new version of the Eclipse plug-in that embeds those fixes is also available. For complete details, please see the SDK Tools, revision 4 and ADT 0.9.5 release notes.

One more thing: you can now follow us on twitter @AndroidDev.

Keep your starred items in sync with Google Maps

Google Maps for mobile has long allowed you to add stars on a map to mark your favorite places. You may have noticed a few months ago that Google Maps for desktop browsers introduced the ability to star places as well. Unfortunately, there was no way to keep these starred places in sync with Google Maps on your phone. With today's release of Google Maps for mobile 3.3 on Windows Mobile and Symbian phones, you'll now be able to keep the starred places on your phone and on your computer completely synchronized. It's like magic, but magic that you can use. Let me show you how:

My colleague Andy is at his desk right now, and he wants to check out some comedy in London tonight. Google Maps lists the 4th result as Upstairs at the Ritzy -- it sounds like a great spot: cheap, fun and comfortable. With one click, Andy stars the item and he's done. When he walks out of the office and turns on Google Maps on his Nokia phone, Upstairs at the Ritzy will be the top place in his list of Starred Items, and it will show up as a star on his map. From there he can call the theater, get walking directions, or even SMS the address to a friend.

Starring on Google Maps for desktop computers and Google Maps for mobile

Starring places also works great when you're out on the town and you find cool spots using your phone. I was in Paris with my wife recently. We visited the obvious tourist spots like la tour Eiffel and le Musée du Louvre, but we also found a few interesting places we hadn't expected. While wandering the streets of Paris, we stumbled upon a cafe...the sort of place you'll remember forever but whose name you'll immediately forget. I started Google Maps on my Nokia phone, searched for the name of the cafe (Les Philosophes) and starred it, knowing that when I come back to Google Maps on my computer at home, it will be starred, right there, on my map. How cool is it to create a trail of interesting places from your phone?!

For users upgrading from an older version of Google Maps for mobile, you'll be asked, when you log in, whether you'd like to synchronize your existing starred items with your Google Account. This means you can preserve all the work you've put into customizing your map on your mobile, and have it show up, conveniently, in Google Maps in your desktop browser.

To enjoy the benefits of all this mobile synchronization goodness, download Google Maps for mobile for your Symbian or Windows Mobile phone by visiting m.google.com/maps in your mobile browser. And don't worry, we're busy building this same functionality into our other mobile versions of Google Maps -- so sit tight.

Posted by Flavio Lerda and Andy McEwan, Software Engineers

Announcing the Winners of ADC 2

Back in May at Google I/O, we announced ADC 2 -- the second Android Developer Challenge -- to encourage the development of cool apps that delight mobile users. We received many interesting and high-quality applications -- everything from exciting arcade games to nifty productivity utilities. We also saw apps that took advantage of the openness of Android to enhance system behavior at a deep level, giving users a greater degree of customization and utility. We were particularly pleased to see submissions from many smaller and independent developers.

Over the last couple of months, tens of thousands of Android users around the world reviewed and scored these applications. There were many great apps and the scores were very close. Together with our official panel of judges, these users have spoken and selected our winners!

I am pleased to present the ADC 2 winners gallery, which includes not only the top winners overall and in each category, but also all of the applications that made it to the top 200. There are a lot of great applications in addition to the top winners.

Thanks to everyone who submitted applications or helped us judge the entrants. We encourage all developers to submit their applications to Android Market where their app can be downloaded and enjoyed by Android users around the world.

Google Search by voice: Now in Times Square!

If you've been to Times Square in New York City over the past couple weeks, on any day from 12:30-2:00pm or 6:30-8:00pm, you may have noticed that Google Search by voice is powering Times Square's largest combined displays -- the Reuters Sign and the NASDAQ sign. Anyone can call 888-376-4336 and say the name of a business or a location that they want to search for, like "museum of modern art" or "pizza". Then, the query and local search results from Google will appear on one of the two electronic billboards. This is all part of Verizon's "Droid Does" campaign and has been developed in partnership with Reuters and R/GA, a digital advertising agency.

On Black Friday, Times Square's gigantic interactive search-by-voice demo will be running for 20 hours straight. So if you're in the area and have a chance to take a break from your shopping, or if you want to see your next shopping destination displayed on a Google map on the huge signs, give the demo a try and let us know what you think. And for those of you that aren't in Manhattan on that day, you can still watch the action via webcam.

It's been quite a ride for the search by voice team -- from launching on the iPhone about a year ago, to our launches on BlackBerry and Android, and on S60 in Mandarin Chinese, to powering billboards in Times Square. We're thankful for the chance to work on technology that excites us and that can help more of you search faster and more easily on your phone. And we hope you've been noticing the ongoing improvements in the accuracy of our voice recognition. We can't wait to show you what we have in store for next year.

Happy Thanksgiving to all!

Posted by Mike LeBeau, Senior Software Engineer

Get movie trailers and more with Google Search for mobile

Our new movie listings page now includes buttons to play trailers right on your phone, ratings and categories, movie posters, upcoming showtimes, and a concise list of the nearest theaters and their distances from you. We keep information on this page succinct so you can quickly browse through shows and showtimes to help you decide which movie to see. If you want more details about a specific movie, just touch the poster or movie title and you'll see our new movie details page that has a synopsis of the movie, a more detailed list of showtimes, the cast and crew, and pictures. Watch our trailer for a quick demo:

When you browse by theater, you'll see a map of the theaters nearest to you. Then, just tap on the link to any particular theater to see what shows are playing there and what times they're playing. Of course, you can also search for specific movies or theaters and see their listings right away. Try searching for recent movies like "New Moon" or "Where the Wild Things Are" or search for "glendale 18 los angeles".

If you enjoy searching for movies with Google nearly as much as we did during testing, then this will be the beginning of a beautiful friendship. Our new search results for movies are available in English in the US, UK, Canada, Ireland, Australia, and New Zealand. As always, let us know your feedback. This conversation can serve no purpose anymore. Goodbye.

Posted by Nick Fey, User Experience Designer, Google mobile team

ADC 2 Public Judging is now closed

Thanks to tens of thousands of Android users around the world who participated in the review of ADC 2 finalist applications, we have now collected sufficient scores to complete Round 2 of public judging.

We are reviewing the final results and will announce the top winners this coming Monday, November 30. Thanks to all who've participated in ADC 2 and good luck to all the finalists.

The Iterative Web App: A new look for Gmail and Google mobile web apps

Some of you noticed and asked us about recent changes we made to Gmail for mobile and a few of our other mobile web apps. If you use the web browser to access Gmail, Latitude, Calendar, or Tasks on your Android-powered device or iPhone, you'll see that we freshened up the look of the buttons and toolbars.

We never want the buttons and toolbars of Google apps to compete with your content; rather, they should complement it. So the headers and buttons are now darker, to better show off the content of your emails and calendar entries.

We also made all the buttons a bit larger, for easier button-tapping.

To try these apps yourself, point your mobile browser to Gmail (gmail.com), Calendar (google.com/calendar), Latitude (google.com/latitude), or Tasks (gmail.com/tasks), or just go to google.com from your phone and find all these web apps under the 'more' link.

Is this an improvement? Let us know what you think.

by Charles Warren, User Experience Designer, Google Mobile

Google Apps Connector for BlackBerry Enterprise Server now connects businesses of all sizes

When we launched the Google Apps Connector for BlackBerry® Enterprise Server in August, we focused on addressing the needs of companies operating their own BlackBerry Enterprise Servers, typically supporting a couple hundred BlackBerry smartphone users per server.

Of course, companies of all sizes are adopting Apps, and their needs for supporting BlackBerry smartphones are as diverse as their businesses. So today we're making it easier for companies large and small to manage their BlackBerry smartphones and save money.

With Google Apps Connector for BES version 1.5, large businesses can now support 500 BlackBerry devices per server, double the previous number. This lets them serve more users with fewer servers.

Small businesses get more flexibility too. The Apps Connector now supports BlackBerry Professional Software, which is designed for up to 30 BlackBerry smartphones. We've also made it possible for a single BlackBerry Enterprise Server to serve users in multiple Google Apps domains, enabling low cost hosting services to be offered by our hosting partners.

Stay tuned for more announcements from partners offering hosting services for Apps customers with BlackBerry smartphones. In the meantime, we're going to continue to make it easier for you to manage mobile devices of all types with Google Apps.

Posted by Zhengping Zuo, Software Engineer and Darrell Kuhn, Site Reliability Engineer

Happy Thanksgiving Travels: Google Maps Navigation now available for Android 1.6

A few weeks ago we launched Google Maps Navigation (Beta) as a free feature of Google Maps on Android 2.0 devices. Today we're expanding availability of Google Maps Navigation to devices running Android 1.6 (Donut) and higher, such as the T-Mobile myTouch 3G and the G1.

Google Maps Navigation is an internet-connected GPS system with voice guidance and automatic rerouting, all running on your mobile phone. Using Google services over your phone's data connection brings important benefits to GPS navigation users, like using Google search (typed or spoken) to enter your destination; fresh map, business, and traffic data; and satellite and Street View imagery along your route.

This release also includes the new Layers feature, which lets you overlay geographical information on the map. View My Maps, transit lines, Wikipedia articles about places, and more.

So if you're traveling this Thanksgiving, you'll be able to enjoy the benefits of an internet connection, whether it's free Wi-Fi at the airport or Google Maps Navigation in your car.

If you have a phone running Android 1.6, you can download an updated version of Google Maps from Android Market to use Navigation today. Google Maps Navigation is in beta and is currently available in the United States. Some features of Android 2.0 are not available on Android 1.6; for example, you can't use the "navigate to" voice command shown in our demo video. However, you can still create a shortcut that will allow you to launch Navigation and start getting directions to a specific place from your current location with just a single touch from your home screen. For example, you can create a "Home" shortcut to quickly navigate home, no matter where you are. Just use the "Add" menu item from the home screen, then choose "Shortcuts", then "Directions." Please visit our forum to give us feedback, or our Help Center to get help using Google Maps Navigation.

Posted by Michael Siliski, Product Manager

Get mobile coupons through Local Search

Since we launched printable coupons on Google Maps a few years ago, people are increasingly using their mobile phones to find local information when they're away from a computer. With more of you going mobile to search for this information, it makes sense for coupons to go mobile too.

So just in time for the holidays, we've made it easier to find discounts when you're on the go. If a business adds a mobile coupon to its Google Local Business Center listing, you'll be able to access it from your mobile device. Just go to google.com on your phone and search for a local business. When you land on its Place Page, you'll see any coupons or discounts that might be available. Then simply show the participating business the coupon, right from your phone, to redeem the offer.

We hope you find these mobile coupons useful and that they help you save money, trees (fewer printed coupons), and your hands (from paper cuts) when you're on the go. Mobile coupons are currently only available in the US. For more information check out the Lat Long Blog.

Posted by Alex Gawley, Product Manager

New Google News for mobile

This new version provides the same richness and personalization on your phone as Google News provides on desktop. Our new homepage displays more stories, sources, and images while keeping a familiar look and feel. Also, you can now reach your favorite sections, discover new ones, find articles and play videos in fewer clicks. If you are an existing Google News reader on desktop, you will find that all of your personalizations are honored in this mobile version too.

Google News for mobile is now available in 29 languages and 70 editions.

So pick up your mobile phone and point your browser to http://news.google.com to catch up on news anytime and anywhere. Feel free to check out more information or leave feedback in our Help Center.

Posted by Ankit "Chunky" Gupta and Alok Goel, Mobile News Team

An update to Google Earth for the iPhone

Just over one year ago, we unveiled Google Earth for the iPhone and iPod touch. Google Earth quickly became one of the most popular applications in the App Store, and after only six months, it was the second most-downloaded free application overall. A big thank-you to the more than 220,000 users who have taken the time to write a review!

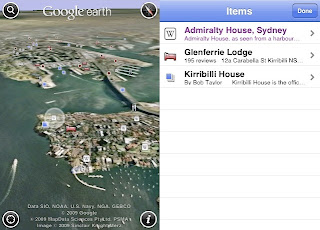

Today, we're proud to announce version 2.0 of Google Earth for iPhone. We've added some exciting new features, including the ability to view maps that you create on your desktop computer right from your iPhone and to explore the app in new languages, as well as improved icon selection and performance.

View your maps wherever you go

Have you ever wanted to view a custom map with Google Earth on your iPhone? Well, now you can. By logging in directly to your Google Maps account, you can view the same maps that you or others have created, using the My Maps interface. Maybe you're on a trip and want to see where Tony Wheeler, the co-founder of Lonely Planet, most likes to travel. Or perhaps you're walking around looking for a restaurant and you want to see where world-famous chef Ferran Adrià likes to eat. All you have to do is click "Save to My Maps", open Earth on the iPhone, log in with the same account information, and voilà, you have your same collection of My Maps right in your pocket.

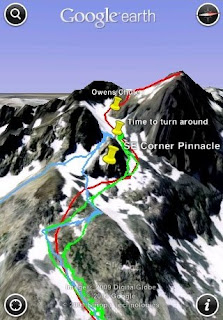

It's fun to create and view your own maps as well. Here's an example of a map that I created that shows the two attempts my friends and I made to summit Mount Ritter in the Sierra Nevada mountain range. As you can see, we didn't quite make it (the red line is the intended route, and the blue and green lines are our 2008 and 2009 attempts, respectively). Next year we'll get it for sure! I created this map by using the desktop version of Google Earth to read the tracks directly out of my GPS device, saving the resulting tracks as a KML file, and then importing that file into My Maps in Google Maps. You can learn more about My Maps here.

We hope you enjoy our latest release. Please note that the app will be rolling out around the world over the next twenty-four hours - if you don't see it immediately, be sure to check back soon. You can download Google Earth for iPhone here.

Posted by Peter Birch, Product Manager, Google Earth

Integrating Applications with Intents

Written in collaboration with Michael Burton, Mob.ly; Ivan Mitrovic, uLocate; and Josh Garnier, OpenTable.

OpenTable, uLocate, and Mob.ly worked together to create a great user experience on Android. We saw an opportunity to enable WHERE and GoodFood users to make reservations on OpenTable easily and seamlessly. This is a situation where everyone wins — OpenTable gets more traffic, WHERE and GoodFood gain functionality to make their applications stickier, and users benefit because they can make reservations with only a few taps of a finger. We were able to achieve this deep integration between our applications by using Android's Intent mechanism. Intents are perhaps one of Android's coolest, most unique, and under-appreciated features. Here's how we exploited them to compose a new user experience from parts each of us have.

Designing

One of the first steps is to design your Intent interface, or API. The main public Intent that OpenTable exposes is the RESERVE Intent, which lets you make a reservation at a specific restaurant and optionally specify the date, time, and party size.

Here's an example of how to make a reservation using the RESERVE Intent:

```java
startActivity(new Intent("com.opentable.action.RESERVE",
        Uri.parse("reserve://opentable.com/2947?partySize=3")));
```

Our objective was to make it simple and clear to the developer using the Intent. So how did we decide what it would look like?

First, we needed an Action. We considered using Intent.ACTION_VIEW, but decided this didn't map well to making a reservation, so we made up a new action. Following the conventions of the Android platform (roughly <package-name>.action.<action-name>), we chose "com.opentable.action.RESERVE". Actions really are just strings, so it's important to namespace them. Not all applications will need to define their own actions. In fact, common actions such as Intent.ACTION_VIEW (aka "android.intent.action.VIEW") are often a better choice if you're not doing something unusual.

Next we needed to determine how data would be sent in our Intent. We decided to encode the data in a URI, although you might choose to pass your data as extras in the Intent's Bundle instead. We used a scheme of "reserve:" to be consistent with our action. We then put our domain in the URI authority and the restaurant ID, which is required, in the URI path, and we moved all of the other, optional inputs into URI query parameters.
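To make that layout concrete, here is a minimal plain-Java sketch that assembles a RESERVE URI from its parts: the scheme, the authority, the required ID in the path, and optional inputs in the query. It uses java.net.URI so it runs outside Android, where an app would more likely use android.net.Uri. The class and method names (ReserveUris, build) are hypothetical, and the rule that only a positive ID counts as valid is an assumption for illustration.

```java
import java.net.URI;
import java.net.URISyntaxException;

/**
 * Hypothetical helper illustrating the "reserve:" URI layout:
 * required restaurant ID in the path, optional inputs as query parameters.
 * Uses java.net.URI as a stand-in for android.net.Uri.
 */
final class ReserveUris {
    private ReserveUris() {}

    static URI build(int restaurantId, Integer partySize) {
        // The restaurant ID is required (assumed: must be positive).
        if (restaurantId <= 0) {
            throw new IllegalArgumentException("restaurantId must be positive");
        }
        // Optional inputs are added to the query string only when present.
        String query = (partySize == null) ? null : "partySize=" + partySize;
        try {
            // scheme "reserve", authority "opentable.com", path "/<restaurantId>"
            return new URI("reserve", "opentable.com", "/" + restaurantId, query, null);
        } catch (URISyntaxException e) {
            // Not expected for these inputs, but the constructor declares it.
            throw new IllegalStateException(e);
        }
    }
}
```

With these assumptions, ReserveUris.build(2947, 3) yields reserve://opentable.com/2947?partySize=3, the same URI as the RESERVE example above. Passing null for the party size simply leaves the query off.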

Exposing

Once we knew what we wanted the Intent to look like, we needed to register the Intent with the system so Android would know to start up the OpenTable application. This is done by inserting an Intent filter into the appropriate Activity declaration in AndroidManifest.xml:

```xml
<activity android:name=".activity.Splash" ... >
    ...
    <intent-filter>
        <action android:name="com.opentable.action.RESERVE"/>
        <category android:name="android.intent.category.DEFAULT" />
        <data android:scheme="reserve" android:host="opentable.com"/>
    </intent-filter>
    ...
</activity>
```

In our case, we wanted users to see a brief OpenTable splash screen as we loaded details about their restaurant selection, so we put the Intent filter in the splash Activity's declaration. We set our category to DEFAULT, which an Activity must declare in order to receive implicit Intents sent with startActivity(). As long as no other Activity also matches this action and data, Android launches OpenTable directly without asking the user which application to use.

Notice that things like the URI query parameter ("partySize" in our example) are not specified by the Intent filter. This is why documentation is key when defining your Intents, which we'll talk about a bit later.
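The split between what the filter checks and what only your documentation can describe can be sketched as a simplified matcher. This is a hypothetical plain-Java model, not Android's IntentFilter implementation. It applies the action, category, and data tests to the filter declared above, and it shows that the query string plays no part in matching.

```java
import java.net.URI;
import java.util.Set;

/**
 * Simplified, hypothetical model of the RESERVE <intent-filter>.
 * NOT Android's IntentFilter.match(); it only shows which parts
 * of an Intent the declared filter looks at.
 */
final class ReserveFilterModel {
    static final String ACTION_RESERVE = "com.opentable.action.RESERVE";
    static final String CATEGORY_DEFAULT = "android.intent.category.DEFAULT";

    private ReserveFilterModel() {}

    static boolean matches(String action, Set<String> categories, URI data) {
        // Action test: the Intent's action must be one the filter declares.
        if (!ACTION_RESERVE.equals(action)) {
            return false;
        }
        // Category test: every category on the Intent must be declared by the
        // filter. startActivity() treats implicit Intents as carrying DEFAULT.
        for (String category : categories) {
            if (!CATEGORY_DEFAULT.equals(category)) {
                return false;
            }
        }
        // Data test: only scheme and host are declared, so the query string
        // (e.g. partySize) is never consulted.
        return data != null
                && "reserve".equals(data.getScheme())
                && "opentable.com".equals(data.getHost());
    }
}
```

In this model, reserve://opentable.com/2947?partySize=3 and reserve://opentable.com/2947?anything=else match equally well. That is why the meaning of each query parameter has to be written down somewhere other than the manifest.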

Processing

Now the only thing left to do was write the code to handle the intent.

```java
@Override
protected void onCreate(Bundle savedInstanceState) {
    super.onCreate(savedInstanceState);
    final Uri uri;
    final int restaurantId;
    try {
        uri = getIntent().getData();
        // The required restaurant ID is the first path segment,
        // e.g. "2947" in reserve://opentable.com/2947?partySize=3
        restaurantId = Integer.parseInt(uri.getPathSegments().get(0));
    } catch (Exception e) {
        // Restaurant ID is required: on a null URI, missing segment, or
        // non-numeric ID, log it ("OpenTable" is an illustrative tag) and
        // send the user to a screen where they can find the restaurant.
        Log.e("OpenTable", "Invalid RESERVE Intent data", e);
        startActivity(FindTable.start(FindTablePublic.this));
        finish();
        return;
    }
    // Optional inputs come from the query string and may be null.
    final String partySize = uri.getQueryParameter("partySize");
    ...
}
```

Although this is not quite all the code, you get the idea. The hardest part here was the error handling. OpenTable wanted to be able to gracefully handle erroneous Intents that might be sent by partner applications, so if we have any problem parsing the restaurant ID, we pass the user off to another Activity where they can find the restaurant manually. It's important to verify the input just as you would in a desktop or web application to protect against injection attacks that might harm your app or your users.
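The same validate-then-fall-back idea can be sketched in plain Java, again with java.net.URI standing in for android.net.Uri. ReservationRequest, parse(), and the MAX_PARTY_SIZE limit of 20 are assumptions for illustration. The post does not describe OpenTable's actual limits or how it treats a bad party size.

```java
import java.net.URI;
import java.net.URISyntaxException;
import java.util.Optional;
import java.util.OptionalInt;

/** Hypothetical defensive parser for RESERVE URIs (illustration only). */
final class ReservationRequest {
    static final int MAX_PARTY_SIZE = 20; // assumed upper bound

    final int restaurantId;
    final OptionalInt partySize;

    private ReservationRequest(int restaurantId, OptionalInt partySize) {
        this.restaurantId = restaurantId;
        this.partySize = partySize;
    }

    /** Returns empty if the URI or its required restaurant ID is unusable. */
    static Optional<ReservationRequest> parse(String raw) {
        if (raw == null) {
            return Optional.empty();
        }
        URI uri;
        try {
            uri = new URI(raw);
        } catch (URISyntaxException e) {
            return Optional.empty();
        }
        if (!"reserve".equals(uri.getScheme()) || !"opentable.com".equals(uri.getHost())) {
            return Optional.empty();
        }
        // Required: the first path segment, e.g. "2947" in "/2947".
        String path = uri.getPath();
        if (path == null || path.length() < 2) {
            return Optional.empty();
        }
        String rest = path.substring(1);
        int slash = rest.indexOf('/');
        String firstSegment = (slash >= 0) ? rest.substring(0, slash) : rest;
        int restaurantId;
        try {
            restaurantId = Integer.parseInt(firstSegment);
        } catch (NumberFormatException e) {
            return Optional.empty();
        }
        if (restaurantId <= 0) {
            return Optional.empty();
        }
        // Optional: partySize. An invalid value is dropped, not fatal.
        OptionalInt partySize = OptionalInt.empty();
        String query = uri.getQuery();
        if (query != null) {
            for (String pair : query.split("&")) {
                int eq = pair.indexOf('=');
                if (eq > 0 && pair.substring(0, eq).equals("partySize")) {
                    try {
                        int n = Integer.parseInt(pair.substring(eq + 1));
                        if (n >= 1 && n <= MAX_PARTY_SIZE) {
                            partySize = OptionalInt.of(n);
                        }
                    } catch (NumberFormatException ignored) {
                        // Leave partySize empty.
                    }
                }
            }
        }
        return Optional.of(new ReservationRequest(restaurantId, partySize));
    }
}
```

The design choice here is that a bad required value rejects the whole request, so the caller can send the user to a search screen. A bad optional value is simply ignored.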

Calling and Handling Uncertainty with Grace

Actually invoking the target application from within the requesting application is quite straightforward, but there are a few cases we need to handle. What if OpenTable isn't installed? What if WHERE or GoodFood doesn't know the restaurant ID?

| | Restaurant ID known | Restaurant ID unknown |
|---|---|---|
| User has OpenTable | Call OpenTable Intent | Don't show reserve button |
| User doesn't have OpenTable | Call Market Intent | Don't show reserve button |

You'll probably wish to work with your partner to decide exactly what to do if the user doesn't have the target application installed. In this case, we decided we would take the user to Android Market to download OpenTable if s/he wished to do so.

```java
public void showReserveButton() {
    // Set up the Intent to call OpenTable
    Uri reserveUri = Uri.parse(String.format(
            "reserve://opentable.com/%s?refId=5449", opentableId));
    final Intent opentableIntent = new Intent("com.opentable.action.RESERVE", reserveUri);

    // Set up the Intent to deep link into Android Market
    Uri marketUri = Uri.parse("market://search?q=pname:com.opentable");
    final Intent marketIntent = new Intent(Intent.ACTION_VIEW).setData(marketUri);

    // Only show the button when we know the restaurant's OpenTable ID
    opentableButton.setVisibility(opentableId > 0 ? View.VISIBLE : View.GONE);
    opentableButton.setOnClickListener(new View.OnClickListener() {
        public void onClick(View v) {
            PackageManager pm = getPackageManager();
            // Launch OpenTable if an installed Activity can handle RESERVE;
            // otherwise send the user to Market to install it.
            boolean canReserve = !pm.queryIntentActivities(opentableIntent, 0).isEmpty();
            startActivity(canReserve ? opentableIntent : marketIntent);
        }
    });
}
```

When the restaurant's ID is unavailable, whether because the restaurant doesn't take reservations or because it isn't part of the OpenTable network, we simply hide the reserve button.
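The choice depends on only two yes/no facts, so one way to keep it testable off-device is to lift the decision table into a pure function. The click handler then only gathers the two inputs, for example opentableId > 0 and the queryIntentActivities() check, before acting. A minimal sketch follows. ReserveDecision and its Action enum are hypothetical names, not part of any published API.

```java
/** Hypothetical pure-function version of the reserve-button decision table. */
final class ReserveDecision {
    enum Action { CALL_OPENTABLE_INTENT, CALL_MARKET_INTENT, HIDE_RESERVE_BUTTON }

    private ReserveDecision() {}

    static Action decide(boolean restaurantIdKnown, boolean openTableInstalled) {
        if (!restaurantIdKnown) {
            // Both rows of the table agree: without an ID there is nothing to reserve.
            return Action.HIDE_RESERVE_BUTTON;
        }
        // ID known: reserve directly if OpenTable can handle it, else offer the Market install.
        return openTableInstalled ? Action.CALL_OPENTABLE_INTENT : Action.CALL_MARKET_INTENT;
    }
}
```

Each of the four table cells then becomes a one-line unit test, with no emulator required.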

Publishing the Intent Specification

Now that all the technical work is done, how can you get other developers to use your Intent-based API besides 1:1 outreach? The answer is simple: publish documentation on your website. This makes it more likely that other applications will link to your functionality and also makes your application available to a wider community than you might otherwise reach.

If there's an application that you'd like to tap into that doesn't have any published information, try contacting the developer. It's often in their best interest to encourage third parties to use their APIs, and if they already have an API sitting around, it might be simple to get you the documentation for it.

Summary

It's really that simple. Now when any of us is in a new city or just around the neighborhood, it's easy to check which place is the new hot spot and immediately grab an available table. It's great not to have to find a restaurant in one application, launch OpenTable to see if there's a table, find out there isn't, launch the first application again, and so on. We hope you'll find this write-up useful as you develop your own public Intents and that you'll consider sharing them with the greater Android community.